News

- February 2024: Accepted at Eurographics 2024!

- February 2024: Website launched.

Abstract

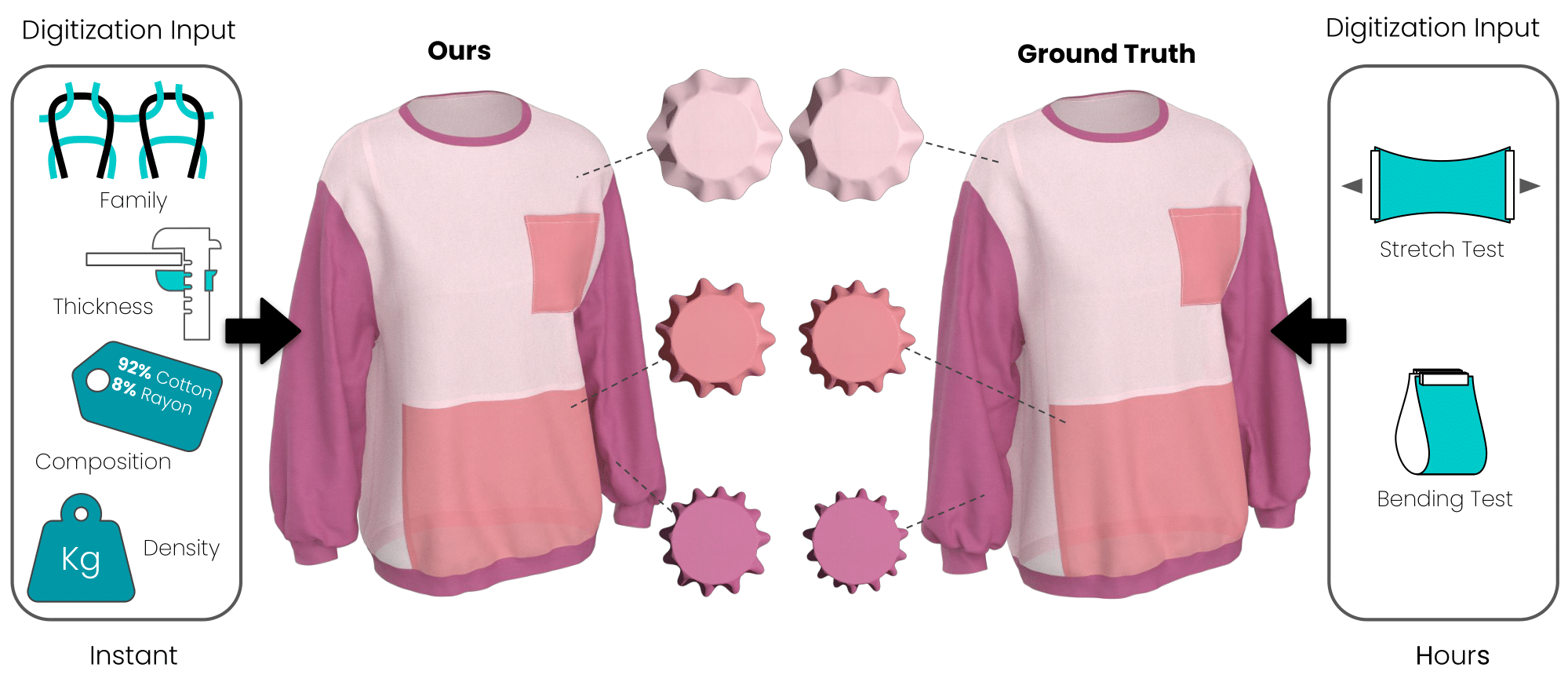

Estimating fabric mechanical properties is crucial for creating realistic digital twins. Existing methods typically require testing physical fabric samples with expensive devices or cumbersome capture setups. In this work, we propose a method to estimate fabric mechanics from known manufacturer metadata alone, such as the fabric family, the density, the composition, and the thickness. Further, to remove the need to know the fabric family, which may be ambiguous or unknown to nonspecialists, we propose an end-to-end neural method that takes planar images of the textile as input. We evaluate both methods with extensive tests, including the industry-standard Cusick drape test, and demonstrate that both produce drapes that strongly correlate with the ground-truth estimates provided by lab equipment. Ours is the first method to offer such a simple capture process for mechanical properties, and it outperforms other methods that require testing the fabric in specific setups.
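To make the metadata input concrete, here is a minimal sketch of how the four fields named above (family, density, composition, thickness) could be packed into a numeric feature vector for a regressor. This is not the paper's implementation: the `FAMILIES` and `FIBERS` vocabularies, the `FabricMetadata` class, the `encode_metadata` function, and the one-hot layout are all assumptions made for illustration, and the thickness value in the usage example is a placeholder rather than a measured figure.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical vocabularies, for illustration only; the source does not list them.
FAMILIES = ["denim", "interlock", "jersey"]
FIBERS = ["cotton", "polyester", "modal"]


@dataclass
class FabricMetadata:
    family: str                    # fabric family, e.g. "denim"
    density_gsm: float             # areal density in grams per square meter
    thickness_mm: float            # thickness in millimeters
    composition: Dict[str, float]  # fiber name -> fraction in [0, 1]


def encode_metadata(meta: FabricMetadata) -> List[float]:
    """Encode metadata as [family one-hot | fiber fractions | density | thickness]."""
    if meta.family not in FAMILIES:
        raise ValueError(f"unknown fabric family: {meta.family}")
    unknown = set(meta.composition) - set(FIBERS)
    if unknown:
        raise ValueError(f"unknown fibers: {sorted(unknown)}")
    if abs(sum(meta.composition.values()) - 1.0) > 1e-6:
        raise ValueError("composition fractions must sum to 1")

    family_one_hot = [1.0 if f == meta.family else 0.0 for f in FAMILIES]
    fiber_fractions = [meta.composition.get(f, 0.0) for f in FIBERS]
    return family_one_hot + fiber_fractions + [meta.density_gsm, meta.thickness_mm]


# Black denim from the Results section: 265 gsm, 75% cotton, 25% polyester.
# The thickness (0.8 mm) is a placeholder assumption, not a value from the source.
denim = FabricMetadata("denim", 265.0, 0.8, {"cotton": 0.75, "polyester": 0.25})
features = encode_metadata(denim)
```

A vector like `features` could then be fed to any regression model that predicts mechanical parameters. The fixed vocabularies keep the vector length constant across fabrics, which is the main reason for choosing a one-hot layout in this sketch.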

Resources

- Eurographics Digital Library

- Preprint [PDF, 17.14 MB]

- Supplementary material [PDF, 46.5 MB]

- Dataset [ZIP, 674.4 MB]

Results

We show a dress made with two different materials: a black denim (265 gsm, 75% cotton, 25% polyester) and a red interlock (146 gsm, 100% modal). Both materials were digitized using capture equipment (Ground Truth) and with both of our methods (MECHMET and MECHIM). The images show that the black and red materials drape very differently from each other, while the three versions of each material (Ground Truth, MECHMET, and MECHIM) are almost identical.

BibTeX

Acknowledgements

This work was funded in part by the Spanish Ministry of Science, Innovation and Universities (MCIN/AEI/10.13039/501100011033) and the European Union NextGenerationEU/PRTR programs through the TaiLOR project (CPP2021-008842). Dr. Elena Garces was partially supported by a Juan de la Cierva - Incorporacion Fellowship (IJC2020-044192-I).